Modern artificial intelligence is often lauded for its growing sophistication, but mostly in doomer terms. If you’re on the apocalyptic end of the spectrum, the AI revolution will automate millions of jobs, eliminate the barrier between reality and artifice, and, eventually, force humanity to the brink of extinction. Along the way, maybe we get robot butlers, maybe we’re stuffed into embryonic pods and harvested for energy. Who knows.

But it’s easy to forget that most AI right now is terribly stupid and only useful in narrow, niche domains for which its underlying software has been specifically trained, like playing an ancient Chinese board game or translating text from one language into another.

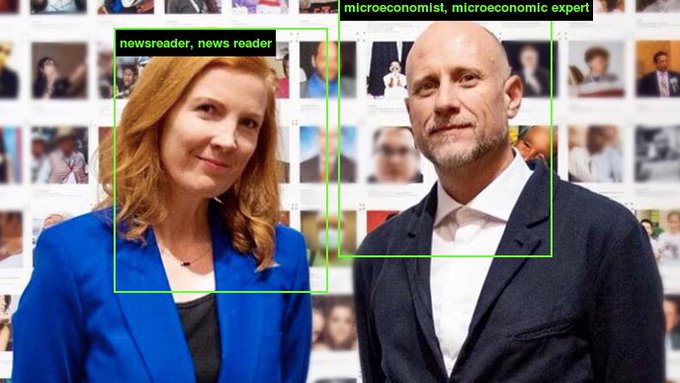

Ask your standard recognition bot to do something novel, like analyze and label a photograph using only its acquired knowledge, and you’ll get some comically nonsensical results. That’s the fun behind ImageNet Roulette, a nifty web tool built as part of an ongoing art exhibition on the history of image recognition systems.

As explained by artist and researcher Trevor Paglen, who created the exhibit Training Humans with AI researcher Kate Crawford, the point is not to make a judgement about AI, but to engage with its current form and its complicated academic and commercial history, as grotesque as it might be.

“When we first started conceptualizing this exhibition over two years ago, we wanted to tell a story about the history of images used to ‘recognize’ humans in computer vision and AI systems. We weren’t interested in either the hyped, marketing version of AI nor the tales of dystopian robot futures,” Crawford told the Fondazione Prada museum in Milan, where Training Humans is featured. “We wanted to engage with the materiality of AI, and to take those everyday images seriously as a part of a rapidly evolving machinic visual culture. That required us to open up the black boxes and look at how these ‘engines of seeing’ currently operate.”

It’s a worthy pursuit and a fascinating project, even if ImageNet Roulette represents the goofier side of it. That’s mostly because ImageNet, a renowned training data set AI researchers have relied on for the last decade, is generally bad at recognizing people. It’s mostly an object recognition set, but it has a category for “People” that contains thousands of subcategories, each valiantly trying to help software do the seemingly impossible task of classifying a human being.

And guess what? ImageNet Roulette is super bad at it.

I don’t even smoke! But for some reason, ImageNet Roulette thinks I do. It also appears to believe that I am located in an airplane, although to its credit, open office layouts are only slightly less suffocating than narrow metal tubes suspended tens of thousands of feet in the air.

ImageNet Roulette was put together by developer Leif Ryge working under Paglen, as a way to let the public engage with the art exhibition’s abstract concepts about the inscrutable nature of machine learning systems.

Here’s the behind-the-scenes magic that makes it tick:

Part of the project is also to highlight the fundamentally flawed, and therefore human, ways that ImageNet classifies people, which are often “problematic” and “offensive.” (One interesting example popping up on Twitter is that some men uploading photos appear to be randomly tagged as “rape suspect” for reasons unexplained.) Paglen says this is crucial to one of the themes the project is highlighting: the fallibility of AI systems and the prevalence of machine learning bias as a result of its compromised human creators.

Want to see how an AI trained on ImageNet will classify you? Try ImageNet Roulette, based on ImageNet's Person classes. It's part of the 'Training Humans' exhibition by @trevorpaglen & me - on the history & politics of training sets. Full project out soon: imagenet-roulette.paglen.com

ImageNet is one of the most significant training sets in the history of AI. A major achievement. The labels come from WordNet, the images were scraped from search engines. The 'Person' category was rarely used or talked about. But it's strange, fascinating, and often offensive.

Although ImageNet Roulette is a fun distraction, the underlying message of Training Humans is a dark, but vital, one.

“Training Humans explores two fundamental issues in particular: how humans are represented, interpreted and codified through training datasets, and how technological systems harvest, label and use this material,” reads the exhibition description. “As the classifications of humans by AI systems becomes more invasive and complex, their biases and politics become apparent. Within computer vision and AI systems, forms of measurement easily — but surreptitiously — turn into moral judgments.”

Source: https://www.theverge.com
