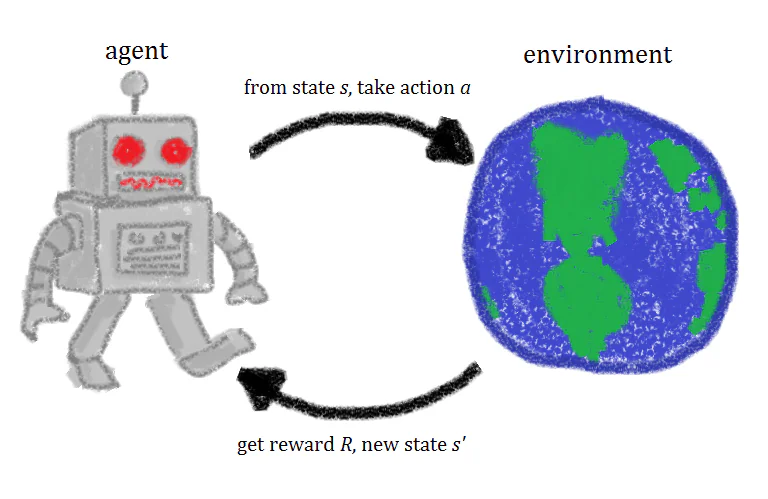

Put simply, reinforcement learning is a machine learning technique that involves training an artificial intelligence agent through the repetition of actions and associated rewards. A reinforcement learning agent experiments in an environment, taking actions and being rewarded when the correct actions are taken. Over time, the agent learns to take the actions that will maximize its reward. That’s a quick definition of reinforcement learning, but taking a closer look at the concepts behind reinforcement learning will help you gain a better, more intuitive understanding of it.

Reinforcement In Psychology

The term “reinforcement learning” is adapted from the concept of reinforcement in psychology, so let’s take a moment to understand that concept. In the psychological sense, reinforcement refers to anything that increases the likelihood that a particular response or action will occur. This is a central idea of the theory of operant conditioning, initially proposed by the psychologist B.F. Skinner. For humans, reinforcement can take the form of praise, a raise at work, candy, or fun activities.

In the traditional, psychological sense, there are two types of reinforcement: positive and negative. Positive reinforcement is the addition of something to increase a behavior, like giving your dog a treat when it is well behaved. Negative reinforcement is the removal of an unpleasant stimulus to increase a behavior, like shutting off loud noises to coax out a skittish cat.

Positive and Negative Reinforcement In Machine Learning

Both types of reinforcement increase the frequency of a behavior: positive reinforcement does so by adding a reward, negative reinforcement by removing something unwanted. In general, positive reinforcement is the most common type used in reinforcement learning, as it helps models maximize their performance on a given task. Positive reinforcement also leads the model to make more sustainable changes, which can become consistent patterns and persist for long periods of time.

In contrast, while negative reinforcement also makes a behavior more likely to occur, it is used to maintain a minimum performance standard rather than to reach a model’s maximum performance. Negative reinforcement can help keep a model away from undesirable actions, but it does little to make a model explore desired actions.

Training A Reinforcement Agent

When a reinforcement learning agent is trained, four ingredients are involved: the initial state (State 0), the new state (State 1), actions, and rewards.

Imagine that we are training a reinforcement agent to play a platforming video game where the AI’s goal is to make it to the end of the level by moving right across the screen. The initial state of the game is drawn from the environment, meaning the first frame of the game is analyzed and given to the model. Based on this information, the model must decide on an action.

During the initial phases of training, these actions are random, but as the model is reinforced, certain actions become more common. After the action is taken, the environment of the game is updated and a new state, or frame, is created. If the action taken by the agent produced a desirable result (say, the agent is still alive and hasn’t been hit by an enemy), some reward is given to the agent and it becomes more likely to take the same action in the future.

This basic system is constantly looped, happening again and again, and each time the agent tries to learn a little more and maximize its reward.
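The loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the five-position "level", the `step` function, and the preference-weight update are all hypothetical stand-ins, not a real game or a standard algorithm. The point is to show State 0, the action, the reward, and State 1 in one place, and how rewarded actions become more likely.

```python
import random

LEFT, RIGHT = 0, 1
GOAL = 4  # hypothetical level end: positions run from 0 to 4


def step(state, action):
    """Toy environment: return (new_state, reward, done) for one action."""
    new_state = min(state + 1, GOAL) if action == RIGHT else max(state - 1, 0)
    reward = 1.0 if new_state > state else 0.0  # reward progress to the right
    return new_state, reward, new_state == GOAL


def train(episodes=20, max_steps=1000, seed=0):
    rng = random.Random(seed)
    # One preference weight per (state, action); equal weights mean random play.
    prefs = [[1.0, 1.0] for _ in range(GOAL + 1)]
    for _ in range(episodes):
        state = 0  # State 0: the start of the level
        for _ in range(max_steps):
            # Sample an action in proportion to its current preference weight.
            action = rng.choices([LEFT, RIGHT], weights=prefs[state])[0]
            new_state, reward, done = step(state, action)  # State 1 and reward
            prefs[state][action] += reward  # rewarded actions become likelier
            state = new_state
            if done:
                break
    return prefs


prefs = train()
for s, (left, right) in enumerate(prefs[:GOAL]):
    print(f"state {s}: P(right) = {right / (left + right):.2f}")
```

Early on both actions are equally likely; as rewards accumulate, the weight for moving right grows in every position, which mirrors the shift from random to reinforced behavior.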

Episodic vs Continuous Tasks

Reinforcement learning tasks can typically be placed in one of two categories: episodic tasks and continuous tasks (also called continuing tasks).

Episodic tasks carry out the learning/training loop until some end criterion is met and the episode is terminated. In a game, this might be reaching the end of the level or falling into a hazard like spikes. In contrast, continuous tasks have no termination criteria, so training simply continues until the engineer chooses to end it.

Monte Carlo vs Temporal Difference

There are two primary ways of training a reinforcement learning agent. In the Monte Carlo approach, the agent collects rewards throughout an episode, but its value estimates (its score) are updated only once the episode ends. To put that another way, only when the termination condition is hit does the model learn how well it performed. It can then use this information to update its estimates, and when the next training round starts it will respond in accordance with the new information.

The temporal-difference method differs from the Monte Carlo method in that the value estimation, or the score estimation, is updated during the course of the training episode. As soon as the model advances to the next time step, the values are updated.
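To make the difference concrete, here is a minimal sketch that applies both kinds of update to the same short recorded episode. The three states, the step size `ALPHA`, and the discount factor `GAMMA` are assumptions chosen for illustration, and the temporal-difference variant shown is the simple one-step form, often called TD(0).

```python
# A recorded episode as (state, reward, next_state); None marks termination.
episode = [("A", 0.0, "B"), ("B", 0.0, "C"), ("C", 1.0, None)]
ALPHA, GAMMA = 0.5, 1.0  # assumed step size and discount factor


def monte_carlo_update(values, episode):
    """Update only after the episode ends, using the full observed return."""
    g = 0.0
    for state, reward, _ in reversed(episode):
        g = reward + GAMMA * g  # return from this state to the end
        values[state] += ALPHA * (g - values[state])
    return values


def td0_update(values, episode):
    """Update after every step, bootstrapping from the next state's estimate."""
    for state, reward, next_state in episode:
        next_value = values[next_state] if next_state is not None else 0.0
        target = reward + GAMMA * next_value  # needs only one step of experience
        values[state] += ALPHA * (target - values[state])
    return values


mc = monte_carlo_update({"A": 0.0, "B": 0.0, "C": 0.0}, episode)
td = td0_update({"A": 0.0, "B": 0.0, "C": 0.0}, episode)
print(mc)  # {'A': 0.5, 'B': 0.5, 'C': 0.5}
print(td)  # {'A': 0.0, 'B': 0.0, 'C': 0.5}
```

After one episode, the Monte Carlo update has credited every state on the path with the final reward, while the temporal-difference update has only changed the state right before the reward. Over repeated episodes the temporal-difference estimates propagate backward one step at a time, without ever waiting for an episode to finish.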

Explore vs Exploit

Training a reinforcement learning agent is a balancing act between two competing goals: exploration and exploitation.

Exploration is the act of collecting more information about the surrounding environment, while exploitation is using the information already known about the environment to earn rewards. If an agent only explores and never exploits the environment, the desired actions will never be carried out. On the other hand, if the agent only exploits and never explores, it will learn to carry out just one action and won’t discover other possible strategies for earning rewards. Therefore, balancing exploration and exploitation is critical when creating a reinforcement learning agent.
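One common way to strike this balance is an epsilon-greedy rule: with a small probability `epsilon` the agent picks a random action (explore), and otherwise it picks the action with the highest estimated reward (exploit). The article does not prescribe this rule; the sketch below is one simple option, and the reward estimates in it are made-up numbers.

```python
import random


def epsilon_greedy(action_values, epsilon, rng):
    """Explore with probability epsilon, otherwise exploit the best-known action."""
    if rng.random() < epsilon:
        return rng.randrange(len(action_values))  # explore: any action
    # Exploit: index of the highest estimated value.
    return max(range(len(action_values)), key=action_values.__getitem__)


rng = random.Random(0)
estimates = [0.2, 0.8, 0.5]  # hypothetical reward estimates for three actions
picks = [epsilon_greedy(estimates, epsilon=0.1, rng=rng) for _ in range(1000)]
print(picks.count(1) / len(picks))  # share of picks that went to action 1
```

Setting `epsilon` to 0 gives a purely exploiting agent and setting it to 1 gives a purely exploring one, the two failure modes described above. In practice `epsilon` is often started high and lowered as training progresses.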

Uses For Reinforcement Learning

Reinforcement learning can be used in a wide variety of roles, and it is best suited to applications where tasks need to be automated.

Automation of tasks to be carried out by industrial robots is one area where reinforcement learning proves useful. Reinforcement learning can also be used for problems like text mining, creating models that are able to summarize long bodies of text. Researchers are also experimenting with using reinforcement learning in the healthcare field, with reinforcement agents handling jobs like the optimization of treatment policies. Reinforcement learning could also be used to customize educational material for students.

Concluding Thoughts

Reinforcement learning is a powerful method of constructing AI agents that can lead to impressive and sometimes surprising results. Training an agent through reinforcement learning can be complex and difficult, as it takes many training iterations and a delicate balance between exploration and exploitation. However, if successful, an agent created with reinforcement learning can carry out complex tasks in a wide variety of environments.

Copyright https://www.unite.ai